Pandu Nayak, Google’s Vice President of Search, published an article about the latest Google BERT update, calling it “the biggest leap forward in the past five years, and one of the biggest leaps forward in the history of Search.” Working with its research team on the science of language understanding, and with the help of machine learning, Google made significant improvements to how it interprets search queries. In practice, Google will better understand user intent and return even more relevant results.

What’s a BERT Exactly?

When you use Google Search, do you speak to it naturally or try to think of some clever keywords? BERT is simply a technological advancement that helps search engines understand us better. Small words like “no,” “to,” and “for” can change the meaning of a query, and Google can now take them into account in a way it could not before. This particularly helps with complex, conversational search queries and allows Google to return even more relevant results. BERT is short for …

Bidirectional Encoder Representations from Transformers

… which is a neural network-based technique for natural language processing (NLP) pre-training. Because the technology is open source, developers can use it to train their own state-of-the-art question-answering systems.

How BERT Models are Applied to Search

BERT is the result of Google’s research on transformers: models that process each word in relation to all the other words in a sentence. This is the SEO game changer. Earlier approaches processed words one by one, in order, hence the focus on keywords. BERT models can consider the full context of a word by analyzing the words that come before and after it. As a result, Google Search is now better at understanding users’ intent than ever before.

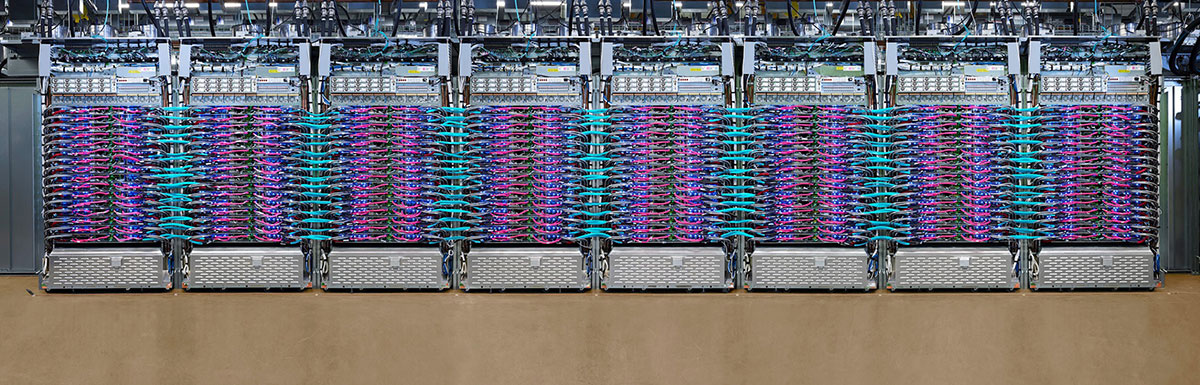
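To make the difference between one-by-one processing and full-context processing concrete, here is a minimal Python sketch. It is not BERT and not how Google implements Search; it only shows which words are visible as context for a given word under each approach. The function names and the sample query are hypothetical, chosen for illustration.

```python
def left_to_right_context(tokens, index):
    """Words visible to a strictly left-to-right reader: only the earlier ones."""
    return tokens[:index]


def bidirectional_context(tokens, index):
    """Words visible when both sides are considered: every other word in the query."""
    return tokens[:index] + tokens[index + 1:]


# Illustrative query (an assumption for this sketch); the small word "to" carries the direction of travel.
query = "2019 brazil traveler to usa need a visa".split()
target = query.index("to")

print(left_to_right_context(query, target))
# ['2019', 'brazil', 'traveler']
print(bidirectional_context(query, target))
# ['2019', 'brazil', 'traveler', 'usa', 'need', 'a', 'visa']
```

With only the left-hand words, nothing says where the traveler is going. With the words on both sides available, “to” can be read together with “usa,” which is the kind of context a bidirectional model draws on.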

What about processing power? We would need some really beefed-up hardware just to allow these BERT models to process millions of websites and the information within them, right? We would: for the first time ever, Google is using its latest Cloud TPU v3 Pods to deliver search results and provide information much more quickly. Cloud TPU Pods are the latest generation of supercomputers that Google built specifically for machine learning.

BERT-Powered Search Results

By applying BERT models to both rankings and featured snippets in Search, Google can do a much better job of helping us find useful information. In fact, BERT will help Search better understand 1 in 10 searches, particularly longer, more conversational ones and those where prepositions like “to” and “for” affect the meaning of the query. Searching on Google will feel much more natural, like having a conversation with someone who can answer every question.

Sounds like something straight out of a sci-fi novel, doesn’t it? As an SEO enthusiast myself, I guess it’s time to really say goodbye to keywords. BERT was even recognized as one of the best marketing strategies to use in 2020, as it is changing user behavior.